THIS MATERIAL IS A MARKETING COMMUNICATION.

LiDAR vs. Camera Only – What is the best sensor suite combination for full autonomous driving?

The autonomous driving industry has been exploring different combinations of sensors to support the development of full self-driving systems. In this article we examine and compare different approaches to sensor suite setup.

Mainstream sensor combination

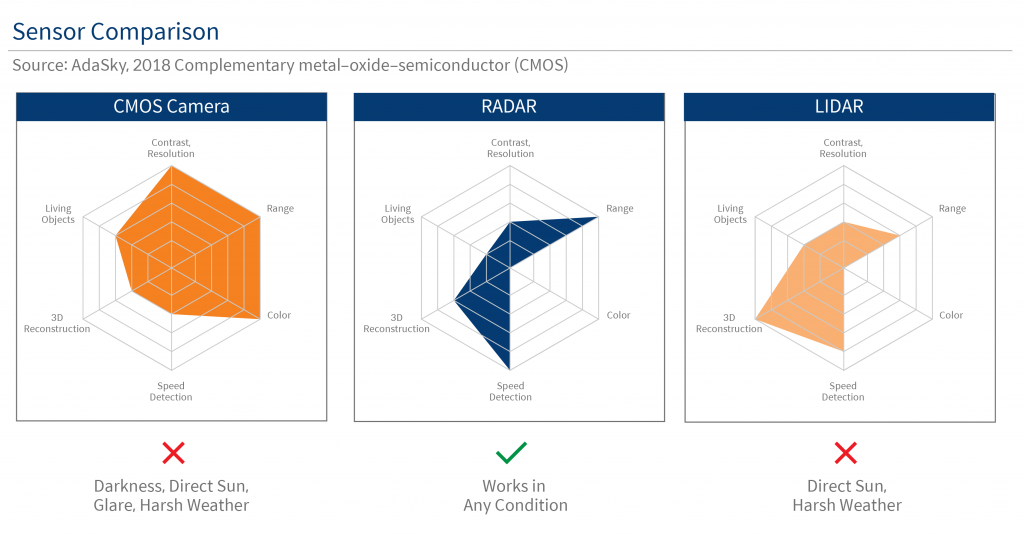

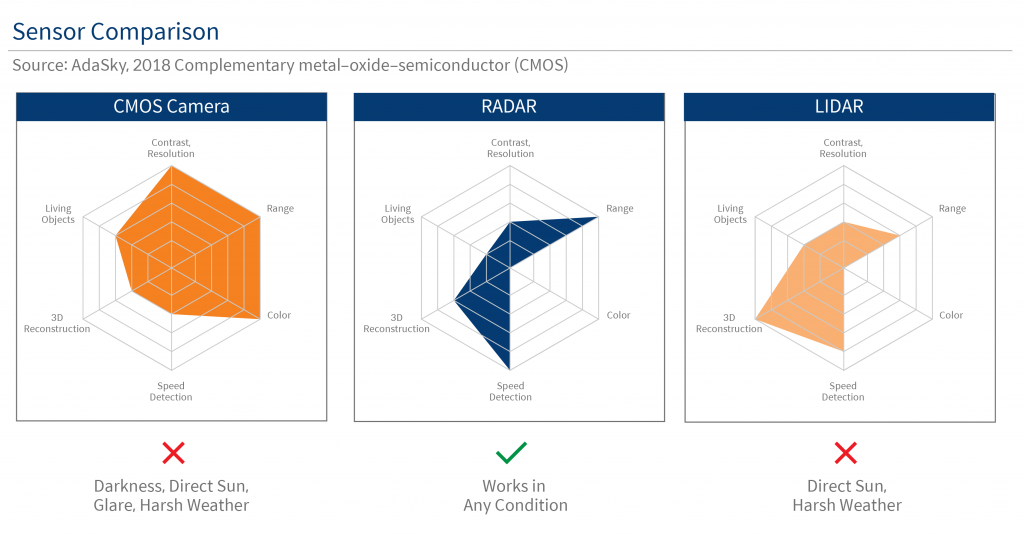

The mainstream sensor suite combines LiDAR, cameras and radar. Waymo, for example, is one of the leading autonomous driving companies that include LiDAR in their sensor suites. The company launched its fifth-generation platform in March 2020, which features an in-house developed 360-degree LiDAR that provides a high-resolution 3D picture of the vehicle's surroundings. Four perimeter LiDARs are placed at four points around the sides of the vehicle, offering broad coverage with a wide field of view to detect nearby objects. These short-range LiDARs provide enhanced spatial resolution and accuracy for navigating tight gaps in city traffic and covering potential blind spots. There are 29 cameras on board to help the vehicle understand its surroundings. The vehicle is also equipped with six radars, which can detect objects at greater distances with a wider field of view.

Full autonomous driving with LiDAR:

Superior low-light performance. LiDAR carries its own light source, so its performance is not affected by low-light conditions. It continues to work as normal even in complete darkness.

More accurate 3D measurement. Human eyes measure distance by triangulation: each eye sees an object from a slightly different position, and the difference between the two views indicates how far away the object is. A camera-only setup can recover 3D measurements of the environment in a similar way. However, LiDAR can offer a more accurate 3D representation of the environment, which helps boost the confidence of the autonomous driving system.
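As a rough illustration of why camera-based triangulation becomes less precise with distance, the sketch below uses the standard stereo relation (depth = focal length × baseline / disparity) and its first-order error term. The rig parameters (focal length, baseline and matching error) are hypothetical values chosen for illustration and are not taken from any vehicle discussed in this article.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres of a point seen by two rectified cameras.

    focal_px     -- focal length expressed in pixels
    baseline_m   -- distance between the two cameras in metres
    disparity_px -- horizontal shift of the point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float,
                depth_m: float, disparity_error_px: float) -> float:
    """First-order depth uncertainty caused by a disparity matching error.

    Differentiating depth = f * B / d with respect to d gives an error
    that grows with the square of the depth.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Hypothetical rig (assumed values, for illustration only).
FOCAL_PX = 1000.0      # focal length in pixels
BASELINE_M = 0.5       # camera separation in metres
MATCH_ERR_PX = 0.25    # assumed disparity matching error in pixels

if __name__ == "__main__":
    for depth in (10.0, 50.0, 100.0):
        err = depth_error(FOCAL_PX, BASELINE_M, depth, MATCH_ERR_PX)
        print(f"{depth:5.0f} m -> +/- {err:.2f} m")
```

Under these assumed values, the depth uncertainty grows from about 0.05 m at 10 m to about 5 m at 100 m, because the error scales with the square of the distance. This is one reason a direct range measurement can help at longer distances.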

Full autonomous driving without LiDAR:

Tesla is one of the leading developers of autonomous driving systems and does not include LiDAR in its sensor suite. The company uses a combination of eight external cameras, one radar and 12 ultrasonic sensors.(Waymo 2020) Arguments for not having LiDAR include:

LiDAR faces the same penetration handicap as cameras. LiDAR lasers operate in the near-infrared band, at wavelengths close to those of visible light, which means the laser scatters in much the same way as the visible light that cameras collect. In heavy fog or rain, LiDAR is therefore also impaired, as a laser at that wavelength cannot penetrate fog, rain or anything else that blocks visible light. This is similar to what happens to cameras.

LiDAR provides limited information compared to cameras. The modern driving environment is designed for vision-based systems (i.e. humans). LiDAR provides a precise 3D measurement of the surroundings but no information such as colour or text, so on its own it is not sufficient for a good understanding of the surroundings. Cameras, on the other hand, collect richer information, which can be interpreted by well-trained neural nets.

Our take:

Companies choose LiDAR because it provides more accurate 3D measurement. From the arguments above, we can see that one key problem for full autonomous driving is retrieving 3D environment data from 2D images. This requires a well-trained machine learning model that understands driving conditions as well as a human does at all times. Without a significant amount of well-annotated, high-quality data, especially data on long-tail scenarios, it is impossible to train such models. The problem for most companies, including Waymo, is that they do not currently have access to a sufficient volume of annotated real-world data, so for now they adopt LiDAR in their sensor suites to make up for this shortcoming.

Full autonomous driving may be achieved earlier with LiDAR. Waymo has been operating without safety drivers in Phoenix since 2017,(Waymo 2018) while Tesla only launched its Full Self-Driving beta in the US at the end of 2020.(Tesla 2020) Waymo largely focuses its testing in the US, whereas Tesla vehicles are tested all over the world. Waymo’s approach, which combines high-precision maps with LiDAR, will enable it to remove safety drivers sooner in areas that the company has mapped and trained on well.

Both sensor combinations will achieve full autonomy. Waymo has reached the end of the road in Phoenix, and the remaining challenge is to replicate that in more cities and areas. We think vision machine learning (ML) models will eventually be mature enough for full autonomous driving. We think Tesla will be able to roll out full autonomous driving much faster once its model is mature, as the company collects significantly more data from a much more diverse set of environments all over the world.

Disclaimer & Information for Investors

No distribution, solicitation or advice: This document is provided for information and illustrative purposes and is intended for your use only. It is not a solicitation, offer or recommendation to buy or sell any security or other financial instrument. The information contained in this document has been provided as a general market commentary only and does not constitute any form of regulated financial advice, legal, tax or other regulated service.

The views and information discussed or referred to in this document are as of the date of publication. Certain of the statements contained in this document are statements of future expectations and other forward-looking statements. Views, opinions and estimates may change without notice and are based on a number of assumptions which may or may not eventuate or prove to be accurate. Actual results, performance or events may differ materially from those in such statements. In addition, the opinions expressed may differ from those of other Mirae Asset Global Investments’ investment professionals.

Investment involves risk: Past performance is not indicative of future performance. It cannot be guaranteed that the performance of the Fund will generate a return and there may be circumstances where no return is generated or the amount invested is lost. It may not be suitable for persons unfamiliar with the underlying securities or who are unwilling or unable to bear the risk of loss and ownership of such investment. Before making any investment decision, investors should read the Prospectus for details and the risk factors. Investors should ensure they fully understand the risks associated with the Fund and should also consider their own investment objective and risk tolerance level. Investors are advised to seek independent professional advice before making any investment.

Sources: Information and opinions presented in this document have been obtained or derived from sources which in the opinion of Mirae Asset Global Investments (“MAGI”) are reliable, but we make no representation as to their accuracy or completeness. We accept no liability for a loss arising from the use of this document.

Products, services and information may not be available in your jurisdiction and may be offered by affiliates, subsidiaries and/or distributors of MAGI as stipulated by local laws and regulations. Please consult with your professional adviser for further information on the availability of products and services within your jurisdiction. This document is issued by Mirae Asset Global Investments (HK) Limited and has not been reviewed by the Securities and Futures Commission.

Information for EU investors pursuant to Regulation (EU) 2019/1156: This document is a marketing communication and is intended for Professional Investors only. A Prospectus is available for the Mirae Asset Global Discovery Fund (the “Company”) a société d'investissement à capital variable (SICAV) domiciled in Luxembourg structured as an umbrella with a number of sub-funds. Key Investor Information Documents (“KIIDs”) are available for each share class of each of the sub-funds of the Company.

The Company’s Prospectus and the KIIDs can be obtained from www.am.miraeasset.eu/fund-literature . The Prospectus is available in English, French, German, and Danish, while the KIIDs are available in one of the official languages of each of the EU Member States into which each sub-fund has been notified for marketing under the Directive 2009/65/EC (the “UCITS Directive”). Please refer to the Prospectus and the KIID before making any final investment decisions.

A summary of investor rights is available in English from www.am.miraeasset.eu/investor-rights-summary.

The sub-funds of the Company are currently notified for marketing into a number of EU Member States under the UCITS Directive. FundRock Management Company can terminate such notifications for any share class and/or sub-fund of the Company at any time using the process contained in Article 93a of the UCITS Directive.

Australia: The information contained in this document is provided by Mirae Asset Global Investments (HK) Limited (“MAGIHK”), which is exempted from the requirement to hold an Australian financial services license under the Corporations Act 2001 (Cth) (Corporations Act) pursuant to ASIC Class Order 03/1103 (Class Order) in respect of the financial services it provides to wholesale clients (as defined in the Corporations Act) in Australia. MAGIHK is regulated by the Securities and Futures Commission of Hong Kong under Hong Kong laws, which differ from Australian laws. Pursuant to the Class Order, this document and any information regarding MAGIHK and its products are strictly provided to and intended for Australian wholesale clients only. The contents of this document are prepared by Mirae Asset Global Investments (HK) Limited and have not been reviewed by the Australian Securities and Investments Commission.

Copyright 2021. All rights reserved. No part of this document may be reproduced in any form, or referred to in any other publication, without express written permission of Mirae Asset Global Investments (Hong Kong) Limited.